Dab Tech Solutions

DHIS2 SIEM automation

One aspect of a mature security posture is how well prepared an organization is to detect and respond to cyber threats. A combination of factors has made cyber attacks easier to carry out and therefore more common, so building a system that detects such attacks promptly and responds appropriately is becoming an increasingly urgent, early necessity.

That is why we have built an automated Security Information and Event Management (SIEM) system for DHIS2 on LXD server infrastructure in dhis2-tools-dab. With the introduction of the new features, it was easy to develop a new container type, es_siem, which brings the power of ElasticSearch to the platform.

SIEM & ElasticSearch

ElasticSearch has been a reference in the log collection space for a while. Its ease of installation and management, helped by the Kibana web user interface, made it our default choice for this system, on top of its well-known reliability, its flexibility and the great community behind the project.

Although ElasticSearch was born as a log management solution, it is well positioned to offer a good security alerting system, even though some of its best features in this regard require a paid subscription (more on that in the Limitations section).

That said, a SIEM solution should provide flexible rule creation, notification mechanisms and easy-to-consult alerts, all things ElasticSearch does well, for free.

Setting up a SIEM on DHIS2

So let’s dive into the setup and some first impressions.

This setup is performed thanks to the es_siem container type introduced with dhis2-tools-dab.

You can take a look at the es_siem and es_siem_postsetup files to learn more about how the system is set up and to replicate it manually.

The post-setup script configures journald in each container so that logs are centralised into a file. The filebeat agent then parses that file and ultimately sends the data, already in JSON format, to ElasticSearch for storage and analysis.
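To make the pipeline more concrete, here is a minimal sketch of the kind of conversion that happens between journald and ElasticSearch. It is not the code used by filebeat or by the post-setup script. It only assumes the JSON export format of journald (as produced by `journalctl -o json`), and the output field names are chosen for illustration.

```python
import json
from datetime import datetime, timezone

# Syslog severity names, indexed by the number found in journald's PRIORITY field.
PRIORITY_NAMES = ["emerg", "alert", "crit", "err", "warning", "notice", "info", "debug"]


def journal_line_to_document(line: str) -> dict:
    """Turn one line of journald JSON export into a flat document.

    The output keys are illustrative, not the schema filebeat really uses.
    """
    entry = json.loads(line)

    # __REALTIME_TIMESTAMP is a string holding microseconds since the epoch.
    micros = int(entry["__REALTIME_TIMESTAMP"])
    seconds, remainder = divmod(micros, 1_000_000)
    timestamp = datetime.fromtimestamp(seconds, tz=timezone.utc).replace(microsecond=remainder)

    # PRIORITY is also exported as a string; default to "info" when missing.
    priority = int(entry.get("PRIORITY", 6))
    severity = PRIORITY_NAMES[priority] if 0 <= priority < len(PRIORITY_NAMES) else "info"

    return {
        "@timestamp": timestamp.isoformat(),
        "host": entry.get("_HOSTNAME", "unknown"),
        "program": entry.get("SYSLOG_IDENTIFIER", "unknown"),
        "severity": severity,
        "message": entry.get("MESSAGE", ""),
    }
```

Each returned dictionary can be serialised with `json.dumps` and is the sort of record that ends up indexed and searchable in Kibana.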

To get started, let’s add the container type entry in the containers configuration file.

dab@battlechine:~$ sudo cat /usr/local/etc/dhis/containers.json

{
  "fqdn": "192.168.130.130",
  "email": "davide@dab.solutions",
  "environment": {
    "TZ": "Europe/Madrid"
  },
  "network": "192.168.0.1/24",
  "monitoring": "munin",
  "apm": "glowroot",
  "proxy": "nginx",
  "containers": [
    {
      "name": "proxy",
      "ip": "192.168.0.2",
      "type": "nginx_proxy"
    },
    {
      "name": "postgres",
      "ip": "192.168.0.20",
      "type": "postgres"
    },
    {
      "name": "siem",
      "ip": "192.168.0.200",
      "type": "es_siem"
    }
  ]
}

As you can see, a new entry named “siem” has been added. When running ./create_containers.sh, the new, missing container is identified and created:

dab@battlechine:~/dhis2-tools-ng-dab/setup$ sudo ./create_containers.sh

Skipping adding existing rule

Skipping adding existing rule (v6)

Skipping adding existing rule

Skipping adding existing rule (v6)

Reading package lists... Done

Building dependency tree

Reading state information... Done

auditd is already the newest version (1:2.8.5-2ubuntu6).

apache2-utils is already the newest version (2.4.41-4ubuntu3.13).

unzip is already the newest version (6.0-25ubuntu1.1).

jq is already the newest version (1.6-1ubuntu0.20.04.1).

The following packages were automatically installed and are no longer required:

libfwupdplugin1 libpython2-dev libpython2-stdlib libpython2.7 libpython2.7-dev libpython2.7-minimal libpython2.7-stdlib python2 python2-dev python2-minimal python2.7

python2.7-dev python2.7-minimal

Use 'sudo apt autoremove' to remove them.

0 upgraded, 0 newly installed, 0 to remove and 34 not upgraded.

[2023-02-20 23:19:03] [WARN] [create_containers.sh] Container proxy already exist, skipping

[2023-02-20 23:19:03] [WARN] [create_containers.sh] Container postgres already exist, skipping

[2023-02-20 23:19:03] [INFO] [create_containers.sh] Creating siem of type es_siem (ubuntu 20.04)

Creating siem

waiting for network

[2023-02-20 23:19:11] [INFO] [create_containers.sh] Running setup from containers/es_siem

WARNING: apt does not have a stable CLI interface. Use with caution in scripts.

[...]

[2023-02-20 23:23:08] [INFO] [create_containers.sh] Configuring Elasticsearch and Kibana

[2023-02-20 23:23:08] [INFO] [create_containers.sh] Waiting for Kibana to be up&running (sleep 10s)

[2023-02-20 23:23:19] [INFO] [create_containers.sh] Waiting for Kibana to be up&running (sleep 10s)

[2023-02-20 23:23:51] [INFO] [create_containers.sh] Configuring journal for 'postgres'

[2023-02-20 23:23:52] [INFO] [dhis2-set-journal] Configuring postgres to log to journal

[2023-02-20 23:23:52] [INFO] [create_containers.sh] Configuring filebeat for 'postgres'

[2023-02-20 23:23:53] [INFO] [dhis2-set-elasticsearch] Retrieving filebeat 8.4.1 (arm64)

[2023-02-20 23:23:53] [INFO] [dhis2-set-elasticsearch] Installing filebeat

Selecting previously unselected package filebeat.

(Reading database ... 38331 files and directories currently installed.)

Preparing to unpack /tmp/filebeat.deb ...

Unpacking filebeat (8.4.1) ...

Setting up filebeat (8.4.1) ...

[2023-02-20 23:23:58] [INFO] [dhis2-set-elasticsearch] Configuring filebeat

Synchronizing state of filebeat.service with SysV service script with /lib/systemd/systemd-sysv-install.

Executing: /lib/systemd/systemd-sysv-install enable filebeat

Created symlink /etc/systemd/system/multi-user.target.wants/filebeat.service → /lib/systemd/system/filebeat.service.

[2023-02-20 23:24:01] [INFO] [dhis2-set-elasticsearch] Filebeat configured. All good

[2023-02-20 23:24:01] [INFO] [create_containers.sh] Configuring journal for 'proxy'

[2023-02-20 23:24:02] [INFO] [dhis2-set-journal] Configuring nginx to log to journal error logs and HTTP access logs

[2023-02-20 23:24:03] [INFO] [create_containers.sh] Configuring filebeat for 'proxy'

[2023-02-20 23:24:04] [INFO] [dhis2-set-elasticsearch] Retrieving filebeat 8.4.1 (arm64)

[2023-02-20 23:24:04] [INFO] [dhis2-set-elasticsearch] Installing filebeat

Selecting previously unselected package filebeat.

(Reading database ... 34741 files and directories currently installed.)

Preparing to unpack /tmp/filebeat.deb ...

Unpacking filebeat (8.4.1) ...

Setting up filebeat (8.4.1) ...

[2023-02-20 23:24:10] [INFO] [dhis2-set-elasticsearch] Configuring filebeat

Synchronizing state of filebeat.service with SysV service script with /lib/systemd/systemd-sysv-install.

Executing: /lib/systemd/systemd-sysv-install enable filebeat

Created symlink /etc/systemd/system/multi-user.target.wants/filebeat.service → /lib/systemd/system/filebeat.service.

[2023-02-20 23:24:13] [INFO] [dhis2-set-elasticsearch] Filebeat configured. All good

[2023-02-20 23:24:13] [INFO] [create_containers.sh] Configuring Kibana proxy access

[2023-02-20 23:24:14] [INFO] [create_containers.sh] Done configuring SIEM

[2023-02-20 23:24:14] [WARN] [create_containers.sh] Monitor container not existing or running. Skipping

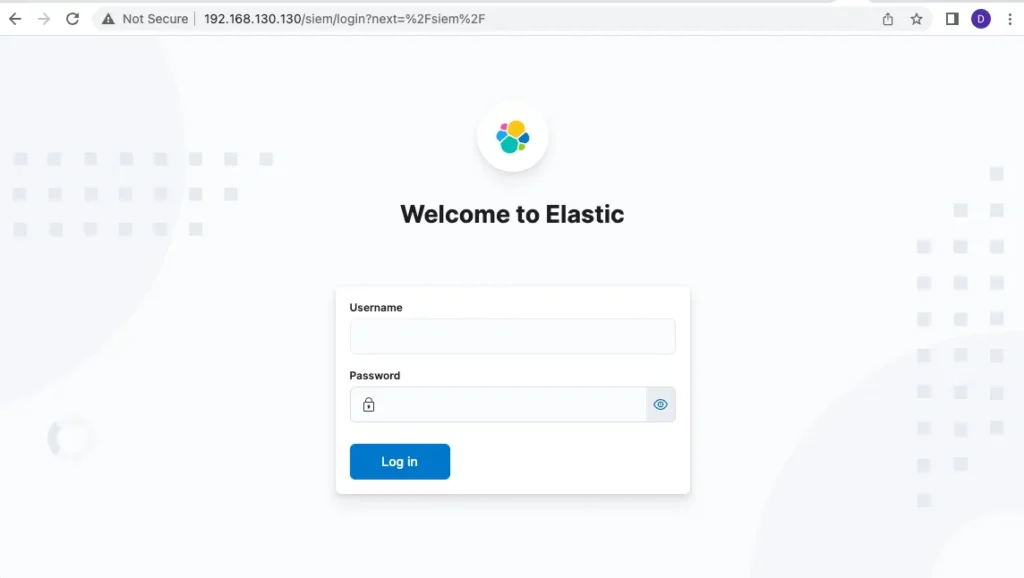

dab@battlechine:~/dhis2-tools-ng-dab/setup$If everything goes as expected, you should be able to reach the Kibana web page:
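The setup log shows the script waiting for Kibana in 10-second steps. If you want to run the same kind of check yourself, the sketch below polls Kibana's status endpoint (`/api/status`) until it answers. The base URL, the number of attempts and the helper names are assumptions made for illustration. Depending on your security and TLS settings, the endpoint may also require credentials or a trusted certificate, in which case this simple probe keeps returning False.

```python
import time
import urllib.error
import urllib.request
from typing import Callable


def kibana_is_up(base_url: str, timeout: float = 5.0) -> bool:
    """Return True if the Kibana status endpoint answers with HTTP 200."""
    try:
        with urllib.request.urlopen(f"{base_url}/api/status", timeout=timeout) as response:
            return response.status == 200
    except (urllib.error.URLError, OSError):
        # Connection refused, timeout, TLS problems and HTTP errors all mean "not ready".
        return False


def wait_until(probe: Callable[[], bool], attempts: int = 30, delay: float = 10.0,
               sleep: Callable[[float], None] = time.sleep) -> bool:
    """Call probe() up to `attempts` times, sleeping `delay` seconds between tries."""
    for attempt in range(attempts):
        if probe():
            return True
        if attempt < attempts - 1:
            sleep(delay)
    return False
```

A call such as `wait_until(lambda: kibana_is_up("http://192.168.0.200:5601"))` would then block until Kibana is reachable or the attempts run out. The address combines the siem container IP from the configuration above with Kibana's default port, and it is only a guess about how your instance is exposed.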

Features built-ins

You can get the credentials via get_creds :

dab@battlechine:~/dhis2-tools-ng-dab/setup$ source libs.sh

dab@battlechine:~/dhis2-tools-ng-dab/setup$ get_creds elasticsearch

{ "service": "elasticsearch", "username": "elastic", "password": "c_2odlH7BoNAB=juUUkg" }

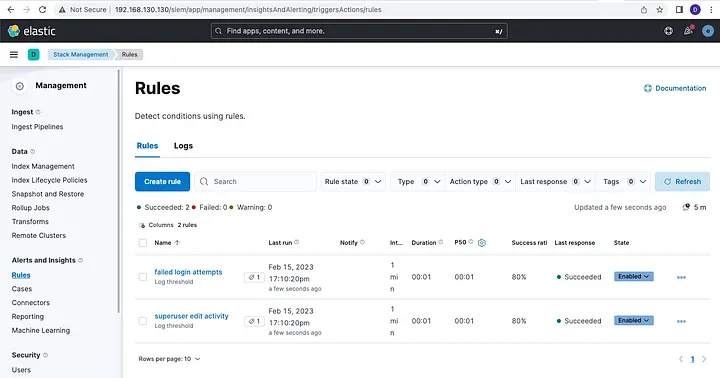

dab@battlechine:~/dhis2-tools-ng-dab/setup$The es_siem_postscript automatically configures the following features:

- A transformation routine that parses logs coming from containers into meaningful data;

- Two security rules that alert when three failed login attempts have been made against a DHIS2 instance;

- An index to store those alerts;

- Two dashboards: one for alerts and another for all logs.
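To illustrate the idea behind the failed-login rules, here is a minimal sketch of a threshold detector. It is not how the Kibana rules are implemented. The 5-minute window, the class name and the assumption that events arrive in chronological order are all choices made for this example.

```python
from collections import defaultdict, deque
from datetime import datetime, timedelta


class FailedLoginDetector:
    """Flag a user once `threshold` failed logins fall within `window`."""

    def __init__(self, threshold: int = 3, window: timedelta = timedelta(minutes=5)):
        self.threshold = threshold
        self.window = window
        self._failures = defaultdict(deque)  # username -> timestamps of recent failures

    def observe(self, username: str, when: datetime) -> bool:
        """Record one failed login and return True if the threshold is reached."""
        recent = self._failures[username]
        recent.append(when)
        # Forget failures that are older than the time window.
        while recent and when - recent[0] > self.window:
            recent.popleft()
        return len(recent) >= self.threshold
```

Feeding it three failures for the same user a few seconds apart makes the third call to `observe` return True, while failures spread over a longer period never reach the threshold.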

Limitations

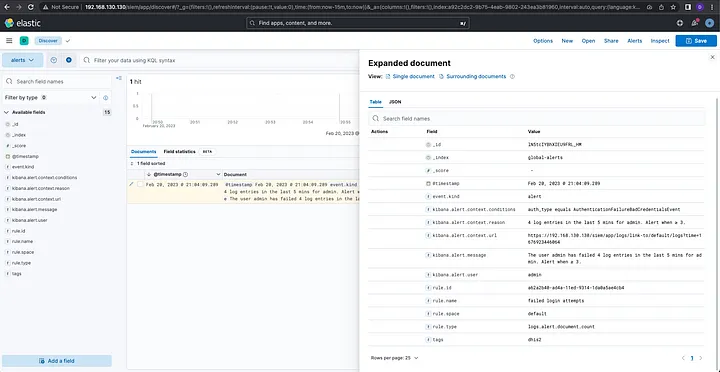

Let’s try to trigger the “failed login attempts” rule: I’ve made 4 unsuccessful login attempts for the user admin at a DHIS2 instance. After few seconds, we will see the alert triggered in the alert data view:

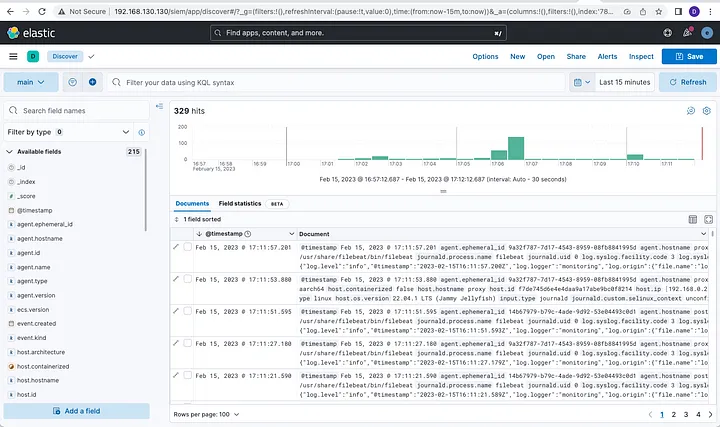

All logs can be seen in the main data view and tweaks can be to further filter data:

There are some limitations that come with this predefined setup.

First, the ease of setup and management comes at a cost: some of the most interesting security features require a paid license, which means an added expense.

The built-in rules are great as a starting point, but the information available is sometimes limited. Each connector exposes different values that can be used to craft or enrich an alert: to give an example, the email and es query connectors expose the actual logs that triggered the alert, while the log threshold connector reports only the triggered values.

You may want to explore free alternatives such as OpenSearch, a fork of ElasticSearch.

From a purely technical point of view, a SIEM, by its nature, stores logs coming from every container, and the more activity there is, the more logs are generated and sent to ElasticSearch. The outcome is a growing amount of disk storage needed to keep up with the database entries. There are several ways to deal with that, besides the ones officially recommended:

- Increase the size of the disk storage;

- Use ElasticSearch sharding;

- Apply a backup policy and archive unnecessary old entries.
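As one possible way to keep old entries under control, the sketch below builds the request body for an ElasticSearch index lifecycle management (ILM) policy that rolls indices over and deletes them after a retention period. The function name and the 7-day and 30-day defaults are assumptions for illustration, and this policy is not part of the es_siem setup. Because the delete phase removes data for good, take a snapshot first if you need to keep old entries.

```python
def build_retention_policy(rollover_after: str = "7d", delete_after: str = "30d") -> dict:
    """Build an ILM policy body: roll over in the hot phase, delete after a retention period."""
    return {
        "policy": {
            "phases": {
                # Start a new backing index once the current one reaches this age.
                "hot": {
                    "actions": {
                        "rollover": {"max_age": rollover_after}
                    }
                },
                # Remove an index once this much time has passed since its rollover.
                "delete": {
                    "min_age": delete_after,
                    "actions": {"delete": {}},
                },
            }
        }
    }
```

The resulting dictionary can be serialised with `json.dumps` and sent to the ILM put-policy endpoint (`PUT _ilm/policy/<policy-name>`), for example from the Kibana Dev Tools console.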

Another issue concerns how DHIS2 manages logs and log entries: it’s not always clear how the logging and audit systems behave, so some experimentation is needed. Assigning log entries to the appropriate severity level is another issue DHIS2 should address to make logs useful and concise.

Beyond

Having a SIEM system is just the first step to a thorough detection and response platform.

We strongly recommend developing your own detection rules that tie into your environment and workflow.

If you want to share them with us, please do so by opening a ticket in our GitHub repository.

From here, you can think about adding Kibana connectors to build more complex workflows: for example, a webhook notification that integrates with a messaging application such as Slack, Telegram or WhatsApp to deliver real-time alerts, or that kicks off immediate actions through a SOAR.
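To give an idea of what such a webhook integration exchanges, here is a minimal sketch that builds a Slack-style message and posts it as JSON. A Kibana connector would send the request for you. The function names and message wording are hypothetical, and the only thing assumed about the receiver is that it accepts a JSON body with a `text` field, as Slack incoming webhooks do.

```python
import json
import urllib.request


def build_alert_message(rule_name: str, username: str, attempts: int) -> dict:
    """Build a minimal JSON payload with a single `text` field."""
    return {"text": f"[{rule_name}] {attempts} failed login attempts for user '{username}'"}


def send_webhook(url: str, payload: dict, timeout: float = 10.0) -> int:
    """POST the payload as JSON to the webhook URL and return the HTTP status code."""
    request = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.status
```

Keeping the payload construction separate from the HTTP call makes it easy to adapt the message to another receiver without touching the transport code.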

If you need more information or need assistance with setting up DHIS2 SIEM automation, don’t hesitate to contact us!